GitHub Deployment Report for changes applied to a GCP data product

I’m part of a team that owns and manages the platform for an in-house, GCP-native data product. The data product is designed on a Data Mesh architecture.

The data product is made up of domains. The diagram below shows the architecture of one domain; all other domains follow the same design.

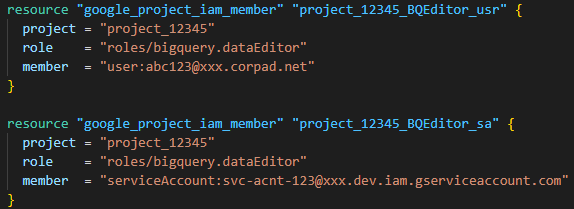

We use Terraform for IaC and GitHub Actions to deploy the Terraform code to GCP projects. This is our current inventory:

- 8 domains (as of today), with more to come

- 10 projects per domain

- 1 GitHub repo per domain

- 3 Terraform workspaces (one per env) per GitHub repo

We deploy changes to the platform almost daily, especially in the Dev and QA envs. We have also integrated ServiceNow into our GitHub Actions workflow, so every deployment is tied to a change ticket. With 8 repos and 24 Terraform workspaces today, tracking every deployment across repos is a humongous task.

GitHub provides APIs for commit activity in a repo, but they don't return the details we want in a deployment report. So I developed a custom process that gathers deployment details from every project in every domain and stores them in a BigQuery dataset.

This approach can be applied to any type of deployment run through GitHub Actions workflows, not just Terraform. And because the data is stored in a BigQuery dataset, we can build deployment reports in any BI tool, such as Power BI or Looker.

The process has two steps.

Step 1: Every GitHub Actions workflow that deploys changes to any env in any domain runs a shell script that gathers the following details and writes them to a file in a central GCS bucket.

Note: One file is created per deployment.

- Domain name — Data product domain

- Env name — Env within the Data product domain

- User ID — ID of the user running the GitHub Actions workflow

- PR name — Pull Request name

- PR SHA — Commit SHA (unique hash) associated with the Pull Request

- PR run status — Completion status (Success or Fail) of the deployment run for the Pull Request

- Change ticket — Change ticket created in ServiceNow for the current deployment
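The shell script itself isn't shown here, so the following is only a rough Python sketch of what Step 1 does: gather the fields above and write one file per deployment to a central bucket. It assumes the workflow is triggered by a `pull_request` event (so the PR title can be read from the event payload at `GITHUB_EVENT_PATH`), that `DOMAIN_NAME`, `ENV_NAME`, `CHANGE_TICKET`, `DEPLOY_STATUS` and `DEPLOY_REPORT_BUCKET` are hypothetical variables the workflow sets, that each record is stored as newline-delimited JSON under a hypothetical date-based prefix with a hypothetical `deployed_at` timestamp field, and that the `google-cloud-storage` package is installed. `GITHUB_ACTOR`, `GITHUB_SHA`, `GITHUB_RUN_ID` and `GITHUB_EVENT_PATH` are standard GitHub Actions variables.

```python
import json
import os
from datetime import datetime, timezone
from typing import Mapping, Optional


def build_deployment_record(
    env: Mapping[str, str], event: dict, status: str, now: Optional[datetime] = None
) -> dict:
    """Collect the Step 1 fields for one deployment run."""
    pr = event.get("pull_request") or {}
    return {
        "domain_name": env["DOMAIN_NAME"],  # hypothetical workflow variable
        "env_name": env["ENV_NAME"],  # hypothetical workflow variable
        "user_id": env["GITHUB_ACTOR"],  # user who triggered the run
        "pr_name": pr.get("title", ""),  # PR title from the event payload
        "pr_sha": env["GITHUB_SHA"],  # commit SHA the run was triggered for
        "pr_run_status": status,  # e.g. "Success" or "Fail"
        "change_ticket": env.get("CHANGE_TICKET", ""),  # hypothetical, set by the ServiceNow step
        "deployed_at": (now or datetime.now(timezone.utc)).isoformat(),  # hypothetical timestamp field
    }


def object_name(record: dict, run_id: str) -> str:
    """One object per deployment, grouped under the date part of deployed_at (hypothetical layout)."""
    day = record["deployed_at"][:10]
    return f"deployments/{day}/{record['domain_name']}-{record['env_name']}-{run_id}.json"


def upload_record(bucket_name: str, record: dict, run_id: str) -> str:
    """Write the record as a single NDJSON line to the central GCS bucket."""
    from google.cloud import storage  # lazy import: the helpers above need no GCP libs

    blob = storage.Client().bucket(bucket_name).blob(object_name(record, run_id))
    blob.upload_from_string(json.dumps(record) + "\n", content_type="application/json")
    return blob.name


def main() -> str:
    """Entry point for the workflow step, reading the variables GitHub Actions provides."""
    with open(os.environ["GITHUB_EVENT_PATH"]) as f:
        event = json.load(f)
    record = build_deployment_record(os.environ, event, os.environ.get("DEPLOY_STATUS", "Fail"))
    return upload_record(os.environ["DEPLOY_REPORT_BUCKET"], record, os.environ["GITHUB_RUN_ID"])
```

A final workflow step guarded with `if: always()` could call `main()`, so failed deployments are recorded as well as successful ones. For `pull_request` events, `GITHUB_SHA` is the merge commit GitHub creates for the PR; the PR's head commit is available as `pull_request.head.sha` in the event payload if that is the SHA you want to report.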

Step 2: A scheduled GitHub Actions workflow runs daily during non-business hours and performs these tasks:

- Concatenate all the files (created for every deployment run) into a single file.

- Load the data from the single file into a BigQuery table.

- This table can be accessed from Power BI or Looker to hydrate a dashboard.
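The Step 2 tasks can be sketched in Python as follows. This is a minimal illustration, not the actual job: it assumes each per-deployment file is newline-delimited JSON stored under a hypothetical date-based prefix such as `deployments/YYYY-MM-DD/`, that the combined file is written to a hypothetical `combined/` prefix, and that the `google-cloud-storage` and `google-cloud-bigquery` packages are installed. `concat_ndjson` does the concatenation; `run_daily_load` appends the combined file to a BigQuery table with a load job.

```python
from datetime import date, timedelta
from typing import Iterable, Optional


def concat_ndjson(chunks: Iterable[str]) -> str:
    """Join per-deployment NDJSON files into one file, skipping blank lines."""
    lines = []
    for chunk in chunks:
        lines.extend(line for line in chunk.splitlines() if line.strip())
    return "\n".join(lines) + "\n" if lines else ""


def run_daily_load(bucket_name: str, table_id: str, day: Optional[date] = None) -> int:
    """Concatenate one day's deployment files and append them to a BigQuery table."""
    # Imported lazily so concat_ndjson can be used without the GCP client libraries.
    from google.cloud import bigquery, storage

    day = day or (date.today() - timedelta(days=1))  # default: yesterday's deployments
    bucket = storage.Client().bucket(bucket_name)
    prefix = f"deployments/{day.isoformat()}/"  # hypothetical layout
    combined_text = concat_ndjson(
        blob.download_as_text() for blob in bucket.list_blobs(prefix=prefix)
    )
    if not combined_text:
        return 0  # no deployments that day, nothing to load

    combined = bucket.blob(f"combined/{day.isoformat()}.json")
    combined.upload_from_string(combined_text, content_type="application/json")

    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.NEWLINE_DELIMITED_JSON,
        write_disposition=bigquery.WriteDisposition.WRITE_APPEND,
        autodetect=True,
    )
    job = bigquery.Client().load_table_from_uri(
        f"gs://{bucket_name}/{combined.name}", table_id, job_config=job_config
    )
    job.result()  # block until the load job completes
    return job.output_rows
```

The scheduled workflow (for example, one using a `schedule:` cron trigger) would call something like `run_daily_load("my-bucket", "my-project.deploy_report.deployments")`, where both names are hypothetical. Schema autodetection keeps the sketch short; an explicit schema is safer for a long-lived table. BigQuery load jobs also accept wildcard URIs (for example `gs://my-bucket/deployments/2024-05-01/*`), which could replace the concatenation step if it ever became a bottleneck.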

Note: A timestamp column in the BigQuery table makes it possible to generate historical reports.
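As one illustration of a historical report, the sketch below counts deployments per day, domain, env and run status from a given start date. It assumes the BigQuery table has a TIMESTAMP column named `deployed_at` and columns named `domain_name`, `env_name` and `pr_run_status` (all hypothetical names), and it uses the `google-cloud-bigquery` client with a query parameter for the start date.

```python
from datetime import date


def deployments_per_day_sql(table_id: str) -> str:
    """Daily deployment counts per domain, env and run status since @start_date."""
    return f"""
SELECT
  DATE(deployed_at) AS deploy_date,
  domain_name,
  env_name,
  pr_run_status,
  COUNT(*) AS deployments
FROM `{table_id}`
WHERE DATE(deployed_at) >= @start_date
GROUP BY deploy_date, domain_name, env_name, pr_run_status
ORDER BY deploy_date, domain_name, env_name
"""


def run_report(table_id: str, start_date: date) -> list:
    """Run the report query and return rows as plain dicts."""
    from google.cloud import bigquery  # lazy import: only needed to execute the query

    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("start_date", "DATE", start_date)]
    )
    job = bigquery.Client().query(deployments_per_day_sql(table_id), job_config=job_config)
    return [dict(row.items()) for row in job.result()]
```

The same SQL could also back a Power BI or Looker dataset directly instead of being run from Python.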

Disclaimer: The posts here represent my personal views and not those of my employer or any specific vendor. Any technical advice or instructions are based on my own personal knowledge and experience.