Copying data from ADLS Gen2 and AWS S3 into a Google Cloud Storage bucket

Google offers a free trial with $300 in credits that lasts for 90 days. I activated my free trial and started exploring the Google Cloud Platform (GCP) console.

For reference, this is the resource hierarchy in the three cloud service providers.

- Azure: Root Management group → Management groups → Subscriptions → Resource groups → Resources

- AWS: Root → Organizational units (OUs) → Member accounts → Resources

- GCP: Organization → Folders → Projects → Resources

Testing any use case requires data. We can either upload the data files directly to a Google Cloud Storage bucket or build a process to transfer the data from other cloud storage services, such as ADLS Gen2 or AWS S3. I went with the latter approach so that I could try out some of the GCP services along the way.

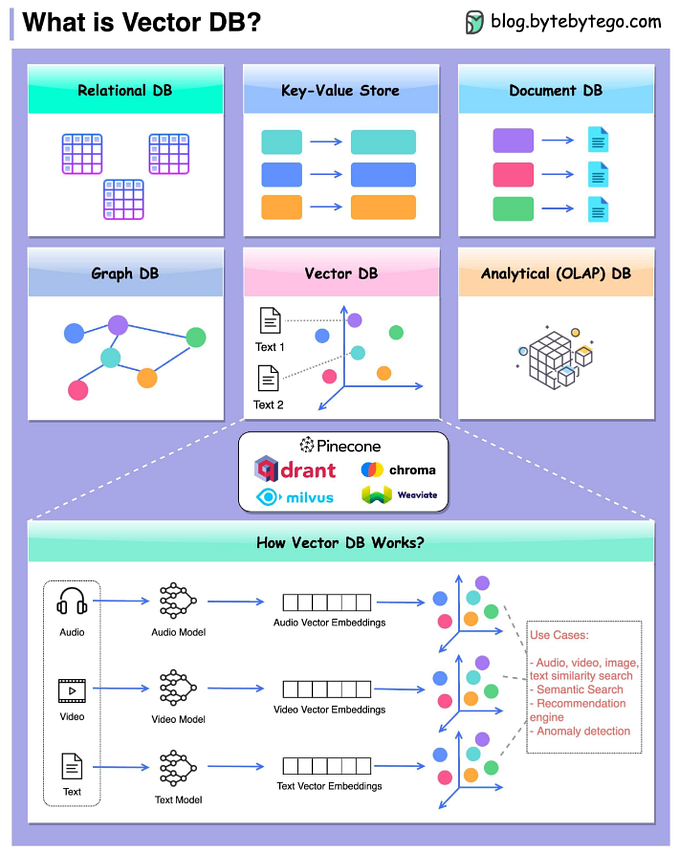

GCP provides two options for getting data from other cloud storage accounts.

- Storage Transfer Service — just for data copy, not for transformation

- Data Fusion — for building pipelines to copy and transform the data

I performed the following activities to try out both services.

- Create an ADLS Gen2 storage account and upload a file to it.

- Create an AWS S3 bucket and upload a file to it.

- Create a Google Cloud Storage bucket.

- Create and run Storage Transfer Service jobs to copy the data from the ADLS Gen2 account and the AWS S3 bucket into the Google Cloud Storage bucket.

- Create and run Data Fusion pipelines to copy the data from the ADLS Gen2 account and the AWS S3 bucket into the Google Cloud Storage bucket.

Step 1: Create an ADLS Gen2 storage account and upload a file to it

I created the storage account with public access allowed because this is only a test use case. Enabling “Allow Blob public access” is not recommended for regular accounts.

Storage Transfer Service needs a shared access signature (SAS) for the ADLS Gen2 storage account in order to read the data from Azure.

Step 2: Create an AWS S3 bucket and upload a file to it

I created the bucket with public access allowed because this is only a test use case. This is not recommended for regular accounts.

To copy the file from the S3 bucket to the Google Cloud Storage bucket, we need the access key ID and secret access key for the AWS account that owns the S3 bucket.

We can get the access keys from the Identity and Access Management (IAM) dashboard → Access keys (access key ID and secret access key) → Create New Access Key. You can reuse existing keys if you already have them.

Step 3: Create a Google Cloud Storage bucket

Step 4: Create and run Storage Transfer Service jobs to copy the data from ADLS Gen2 and AWS S3 into the Google Cloud Storage bucket

Transferring data from ADLS Gen2 account to GCP storage bucket

Note: Paste the SAS token, not the Blob SAS URL.

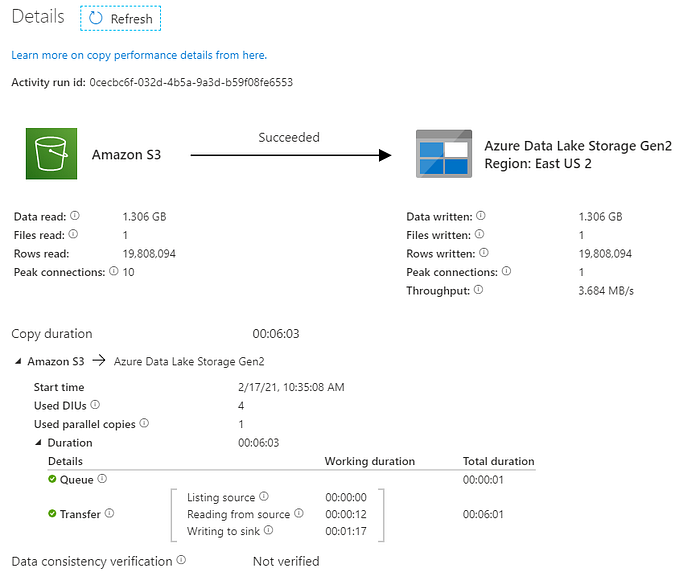
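The note above is easy to trip over because Azure shows both values side by side. As a rough sketch, the SAS token is simply the query-string part of the Blob SAS URL, so it can be separated with Python's standard library. The second function shows the shape of a transfer job request body as I understand the Storage Transfer Service REST API (`transferJobs.create`). The project, storage account, container, and bucket names are hypothetical placeholders, and the field names are worth checking against the current API reference before use.

```python
from urllib.parse import urlsplit


def sas_token_from_url(blob_sas_url: str) -> str:
    """Return only the SAS token (the query string) from a Blob SAS URL."""
    token = urlsplit(blob_sas_url).query
    if "sig=" not in token:
        raise ValueError("URL does not appear to contain a SAS token")
    return token


def azure_transfer_job(project_id, storage_account, container, sas_token, gcs_bucket):
    """Build a request body for an Azure-to-Cloud-Storage transfer job.

    Field names follow my reading of the transferJobs REST resource.
    """
    return {
        "description": "Copy from ADLS Gen2 to Cloud Storage",
        "projectId": project_id,
        "status": "ENABLED",
        "transferSpec": {
            "azureBlobStorageDataSource": {
                "storageAccount": storage_account,
                "container": container,
                "azureCredentials": {"sasToken": sas_token},
            },
            "gcsDataSink": {"bucketName": gcs_bucket},
        },
    }


# Hypothetical values for illustration only.
url = "https://mystorageacct.blob.core.windows.net/mycontainer?sv=2021-06-08&sp=rl&sig=abc123"
token = sas_token_from_url(url)
job = azure_transfer_job("my-project", "mystorageacct", "mycontainer", token, "my-gcs-bucket")
```

Here `token` holds only the part after the `?`, which is the value the transfer job expects in the SAS field.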

Transferring data from AWS S3 bucket to GCP storage bucket
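As a minimal sketch, the S3 transfer configured in the console corresponds roughly to the request body below, based on my reading of the Storage Transfer Service REST API (`transferJobs.create`). The project and bucket names are hypothetical, and the credentials are read from environment variables (with dummy fallbacks) so that real keys never appear in code.

```python
import os


def s3_transfer_job(project_id, s3_bucket, gcs_bucket, access_key_id, secret_access_key):
    """Build a request body for an S3-to-Cloud-Storage transfer job."""
    return {
        "description": "Copy from AWS S3 to Cloud Storage",
        "projectId": project_id,
        "status": "ENABLED",
        "transferSpec": {
            "awsS3DataSource": {
                "bucketName": s3_bucket,
                "awsAccessKey": {
                    "accessKeyId": access_key_id,
                    "secretAccessKey": secret_access_key,
                },
            },
            "gcsDataSink": {"bucketName": gcs_bucket},
        },
    }


# Hypothetical names; the fallbacks are placeholders, not real credentials.
job = s3_transfer_job(
    "my-project",
    "my-s3-bucket",
    "my-gcs-bucket",
    os.environ.get("AWS_ACCESS_KEY_ID", "EXAMPLE_KEY_ID"),
    os.environ.get("AWS_SECRET_ACCESS_KEY", "EXAMPLE_SECRET"),
)
```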

Step 5: Create and run Data Fusion pipelines to copy the data from ADLS Gen2 and AWS S3 into the Google Cloud Storage bucket

GCP provides the Cloud Data Fusion API to create and run pipelines that copy and transform data.

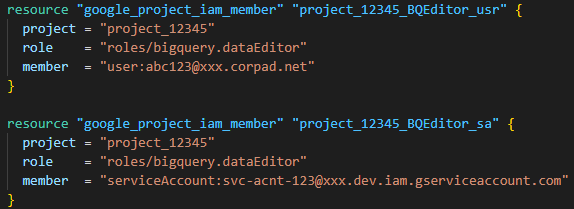

To use this API, a service account has to be created first. You can learn more about service accounts in the Google Cloud documentation.

Once the service account is created, the “Dataproc Worker” role has to be assigned to it. Pipeline execution fails without this role.
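To make the role assignment concrete, here is a small sketch of what it looks like in an IAM policy document (the `bindings` structure returned by `getIamPolicy`). It only manipulates a plain dictionary and does not call any Google API. `roles/dataproc.worker` is the role ID for Dataproc Worker; the service account email and the starting policy are hypothetical, and conditional bindings are ignored for simplicity.

```python
import copy

DATAPROC_WORKER_ROLE = "roles/dataproc.worker"


def add_role_binding(policy: dict, role: str, service_account_email: str) -> dict:
    """Return a copy of an IAM policy with the service account added to the role."""
    updated = copy.deepcopy(policy)
    member = f"serviceAccount:{service_account_email}"
    bindings = updated.setdefault("bindings", [])
    for binding in bindings:
        if binding.get("role") == role:
            # Role already present: add the member only if it is missing.
            if member not in binding.setdefault("members", []):
                binding["members"].append(member)
            break
    else:
        # No binding for this role yet: create one.
        bindings.append({"role": role, "members": [member]})
    return updated


# Hypothetical starting policy and service account.
policy = {"bindings": [{"role": "roles/viewer", "members": ["user:someone@example.com"]}]}
new_policy = add_role_binding(
    policy, DATAPROC_WORKER_ROLE, "datafusion-sa@my-project.iam.gserviceaccount.com"
)
```

The function returns a modified copy rather than changing the input, so the original policy can still be compared against the new one before anything is applied.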

We can proceed with creating an instance once the service account setup is complete.

I chose “Integrate” because my goal is to create a data pipeline.

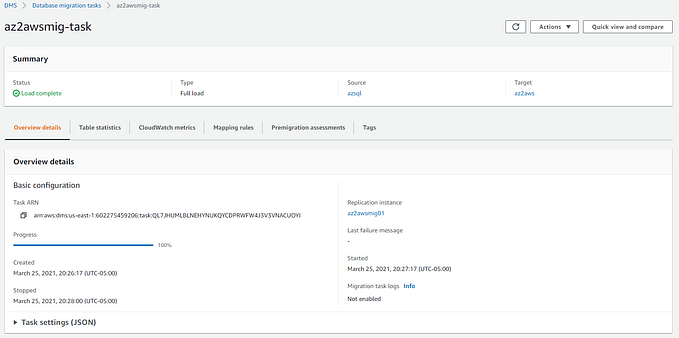

Pipeline for data copy from ADLS Gen2 to GCP storage bucket

To make sure the pipeline works, we can run it in preview mode before deploying it.

Once the preview run is successful, deploy and run the pipeline.
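I ran the pipeline from the Data Fusion UI, but a deployed batch pipeline can also be started over REST. The sketch below only builds the request and does not send it. It assumes the CDAP-style endpoint that Data Fusion exposes, where a batch pipeline runs as the `DataPipelineWorkflow` program. The endpoint URL, pipeline name, and token are hypothetical placeholders; the real endpoint comes from the instance details, and an access token can be obtained with `gcloud auth print-access-token`.

```python
from urllib.parse import quote
from urllib.request import Request


def start_pipeline_request(cdap_endpoint, pipeline_name, auth_token, namespace="default"):
    """Build (but do not send) a POST request that starts a deployed batch pipeline."""
    url = (
        f"{cdap_endpoint.rstrip('/')}/v3/namespaces/{quote(namespace, safe='')}"
        f"/apps/{quote(pipeline_name, safe='')}/workflows/DataPipelineWorkflow/start"
    )
    return Request(url, method="POST", headers={"Authorization": f"Bearer {auth_token}"})


# Hypothetical endpoint, pipeline name, and token.
req = start_pipeline_request(
    "https://example-instance.datafusion.example.com/api", "adls_to_gcs", "TOKEN"
)
```

Passing `req` to `urllib.request.urlopen` would send it; I left that out so the example has no side effects.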

Pipeline for data copy from AWS S3 to GCP storage bucket

Once the preview run is successful, deploy and run the pipeline.

Disclaimer: The posts here represent my personal views and not those of my employer or any specific vendor. Any technical advice or instructions are based on my own personal knowledge and experience.